Just a short note to say that May 2009 was my first month with more than 1,000 visitors and over 2,000 page views. A big step forward for my blog – thank you all! It seems that I owe a lot of the additional attention this month to my post announcing a new attack on SHA-1.

Sunday, May 31, 2009

Sunday, May 17, 2009

The Sub-Time Crisis in Web 2.0

A few months ago I came across Steve Rubel's post on Attention Crash, where he predicts an imminent bursting of the Web 2.0 information bubble since there is no Moore's Law of exponential growth for our attention. This was a provocative remark, which I worked through more thoroughly in Moore's Lore and Attention Crash. Actually, I spent a few days off my laptop scribbling down various ideas and observations on paper, whatever came to mind. I surprised myself by filling 12 pages in a few hours on a Sunday afternoon and a few train rides. Some of the smaller asides will find their way into other posts, like The Restaurant at the End of the Web. Here I will continue with the Attention Crash theme.

The worst case scenario for Web 2.0 is that we are heading for a singularity, precipitated by dividing our attention into informational units effectively rated at zero content. Using available streaming and aggregation tools we can quickly and effortlessly create an overwhelming flood of information. It is clear that we are building social computing structures that we do not comprehend. Social computing joins two areas that have the potential for massive scaling - computing technology and social interaction. The Sub-Time crisis lies at this intersection. In fact it is the intersection.

After reading Rubel's original post I subscribed to his blog and even his FriendFeed, and soon found that while he is concerned about Attention Crash, he is also a major contributor to the pot of Web 2.0 tidbits (links, comments, posts, pictures and so on). He is typical of other Web 2.0 luminaries who produce content but then also produce “content” on there being too much content.

But is there really a coming Attention Crash? Well, certainly not an informational equivalent of the Great Crash of 1929 or the Subprime crisis we are currently experiencing. If there is such a thing it will be very unevenly distributed and localised. It is clear that certain people are going to get rain on their personal web parades, but this is not a global deluge.

When I was a boy my father regularly asked me “stimulating” questions. He belonged to the generation of Australian men for whom intense argument over inconsequential matters was considered both relaxing and invigorating. Apart from the perennial favourite of "How long is a piece of string?", usually recounted when someone asked how much does a reasonable quality car cost (I come from a long line of car people), and "Did you know you can sink a tractor in a lake of average depth of 1 inch?", he did ask me one time "What is the difference between a crash and a collision?" And the answer is that a collision involves two moving objects (say two cars) while a crash involves one moving object and one stationary object (say a car hitting a tree).

So when we speak of Attention Crash we may think of our information intake hitting up against our stationary capacity to absorb it. This capacity varies from person to person, but there are always hard bounds.

But as with the Great Crash, Rubel is probably using Attention Crash to mean a general or systemic collapse - an event that is widespread, foundational and severe. But the coming Attention Crash will not be of this form - there will be enough localised warnings. Unlike our current financial structures, the web is not at threat of collapsing, though many foot soldiers will fall by the way (they see themselves as pioneers, but in fact they are easily replaced). Our informational structures are not hierarchical but relational, and as such, are much more resilient to the removal of individuals. It is not the case that there are eager underlings waiting to replace leaders – the underlings are here and functioning already.

Web 2.0 losses will largely go unnoticed. New users/readers, whose information experience begins today, are being added constantly. They are essentially unaware of what happened last month and will remain that way. Joining involves no generational wait and no corporate ladder to be climbed. Everyone joins and progresses simultaneously. This turnover goes largely unnoticed since leavers are dwarfed by joiners.

Returning to Rubel, his tips on handling Web 2.0 overload are not very helpful - know what you want, be selective, apply the same ruthlessness that you do to your inbox. In short, organise your way out of the Web 2.0 glut. But this advice is nothing much more than platitudes. If at some point you used to receive 20 emails a day, whatever method you used to process those messages is probably not going to help you when you start receiving 50, 100 or 200 emails a day. But perhaps we are not really talking about scale here. If you buy a car for 4 people and then try to transport 50, this is not a matter of scale but just simply miscalculation.

The tools we have are simply the wrong ones. But ironically we seem to rely on web 2.0 to solve the problem it has created. This is surely like hoping that the fast food industry will take steps to help their customers lose weight. The point is that we face a disinterested informational adversary, that for the foreseeable future operates in a scale-free environment. In the information battle we have some sharp spears with occasional impressive horseback riding. However our adversary has the power to create a data mushroom cloud. Your best fallout shelter is abstinence.

Saturday, May 16, 2009

Rethinking Thresholds for Account Lockouts

One of my colleagues informed me that Conficker caused quite a few password lockouts of administrator accounts at his company. The worm used a list of about 200 strings to perform a quick password-guessing attack on privileged accounts. Any such account with a lockout policy set would therefore be either compromised or locked out by Conficker. We can add DoS to the list of Conficker achievements.

But it's not just Conficker that is locking users out of their accounts – in fact, users can do that all by themselves. We all know that quite a few help desk calls are simply requests for password resets of infrequently used applications, or even for frequently used applications where our recall has inexplicably failed us. NetWrix estimates that 30% of all help desk calls are for password resets. One is tempted to think that security policy is more concerned with keeping help desk people occupied than realistically addressing password guessing attacks.

Automatic account lockout is a counter-measure (or control in modern security parlance) for detecting and preventing password guessing attacks. Setting the account lockout threshold to be a small number such as 3 or 5 attempts is part of the conventional wisdom of security. The fewer permitted password guessing attempts the better. But we need to strike some balance here between our own unreliable memories and the persistence of hackers.

As is often the case, a little notation will help the discussion. Let’s assume that a policy defines N possible passwords, which we may represent as

$$P_1, P_2, \ldots, P_N.$$

A given user U will select these passwords according to their personal preferences, and let the probability distribution

$$p_1, p_2, \ldots, p_N, \qquad p_1 + p_2 + \cdots + p_N = 1$$

denote these preferences. Lastly let’s assume for simplicity that the passwords are ordered such that

$$p_1 \ge p_2 \ge \cdots \ge p_N$$

which just means that password P1 is the most likely choice of the user with probability p1, password P2 is the next most likely choice with probability p2, and so on.

Now if we have a 3-strikes-you’re-out lockout policy then what does this mean in terms of our probabilities? Well, assuming the attacker follows the preferences of the user, then the policy states that we are prepared to live with three password guesses with a success of

$$p_1 + p_2 + p_3$$

but not with four password guesses with a success of

$$p_1 + p_2 + p_3 + p_4.$$

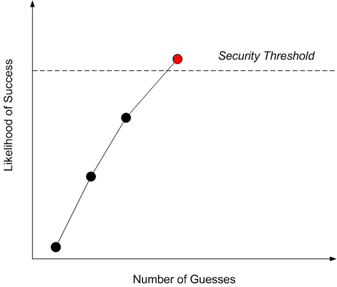

So the critical value here is p4 since it tips the scale from what is acceptable to what is not acceptable. We can represent this state of affairs in the diagram below.

But are our password policies really so brittle that we cross a security threshold from allowing 3 to 4 password guesses? I don’t think so. There is a threshold but it is certainly higher than 3 guesses.

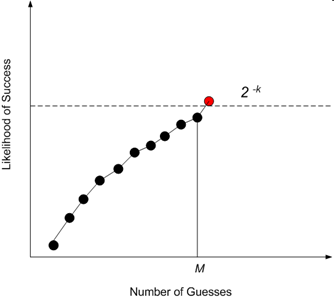

I recently blogged about the approach taken by NIST to this issue. Their formulation of the problem was to find the smallest value of M for which

$$p_1 + p_2 + \cdots + p_M \ge 2^{-k}$$

which leads to the following graph

That is, the NIST approach is to tolerate M password guesses as long as the likelihood of success is less than the threshold 2^{-k}. The particular values chosen for NIST were k = 10 (1 in 1,000) and k = 14 (1 in 16,000), depending on the desired level of security. It is challenging to compute the exact value of M but NIST has some estimates based on deploying policies which guarantee a minimum amount of entropy in user passwords (see Appendix 1 of this document).
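To make the threshold idea concrete, here is a minimal sketch in Python. It assumes a hypothetical Zipf-like preference distribution, since the real distribution of user choices is not known, and the function names (`zipf_preferences`, `guess_success`, `smallest_m`) are purely illustrative. It computes the attacker's success after a given number of guesses, and the smallest M at which the cumulative success reaches 2^{-k}.

```python
from itertools import accumulate


def zipf_preferences(n, s=1.0):
    """Hypothetical user preferences: p_i proportional to 1 / i**s, in descending order."""
    weights = [1.0 / (i ** s) for i in range(1, n + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def guess_success(probs, guesses):
    """Success probability of an attacker who tries the `guesses` most likely passwords."""
    return sum(probs[:guesses])


def smallest_m(probs, k):
    """Smallest M with p_1 + ... + p_M >= 2**-k, or None if the threshold is never reached."""
    threshold = 2.0 ** -k
    for m, cumulative in enumerate(accumulate(probs), start=1):
        if cumulative >= threshold:
            return m
    return None


if __name__ == "__main__":
    # Assumption: 10,000 candidate passwords with Zipf-like popularity.
    probs = zipf_preferences(10_000)
    print("3 guesses:", guess_success(probs, 3))
    print("4 guesses:", guess_success(probs, 4))

    # A uniform distribution is the best case for the defender.
    uniform = [2.0 ** -20] * (2 ** 20)
    print("uniform, k = 10:", smallest_m(uniform, 10))
```

For a uniform distribution over 2^{20} passwords each guess succeeds with probability 2^{-20}, so the threshold for k = 10 is reached only at M = 2^{10} = 1024 guesses. Skewed distributions, which are closer to how people actually choose passwords, reach the threshold far sooner.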

AES-256 and Reputational Risk

Reputational risk is something that everyone understands, particularly businesses who regard their brand as one of their most critical assets. As there is considerable trust in the security of AES-256 both in the public and commercial sectors, reputational risk to AES-256 has a very high impact, and we therefore hope, a very low likelihood of occurrence.

But I will argue in this post that the likelihood of reputational damage to AES-256 is far from low, and perhaps even quite high. AES-256 keys are so large that it is next to impossible to argue that a cryptanalyst will not be able to find some shortcut to recovering keys that saves time over exhaustive search – simply because the cryptanalyst has so much time to play with.

For example, an attack that requires 2^{200} operations is a “huge” saving over the 2^{255} operations for a full exhaustive search of a 256-bit key space. But is a cryptanalyst with a computational arsenal of 2^{200} operations any more of a credible threat than one armed with 2^{255} operations? I think not. But the 2^{200} attack will seem quite menacing since it “saved” a massive 2^{55} operations, and there will be reputational damage to AES-256.

In short, 256-bit keys are simply not defensible within the framework of traditional notions of security. I have been intending to post on this topic for some time, and my thanks go to Eric Rescorla, whose post prompted me to action.

Let's start by talking about absolute and relative breaks on ciphers. Absolute breaks (like the name suggests) signal the end-of-life for a cipher, while relative breaks signal improvements over exhaustive search. Reputational risk for a cipher starts gaining momentum in the latter case.

Absolute Breaks

By an absolute break we mean, for a family of ciphers or for a cipher at a given key length, a known method to efficiently recover keys. We can measure efficiency in several ways. RSA, like other public key systems, represents a family of ciphers since key generation can be scaled to an arbitrary size. RSA keys can have lengths of 512, 1024 or 2048 bits, and even larger lengths in principle. In practice computational cost is the limiting factor that prevents public keys from becoming arbitrarily large. So to break a public key cipher in some absolute sense we require an attack that works efficiently for all potential key sizes. Or put another way, an absolute break prevents the cipher designer from simply escaping the attack by increasing the key length.

Many of the early public key systems based on the knapsack problem (including the famous Merkle-Hellman system) were broken efficiently for all key sizes by applying the LLL basis reduction algorithm (you can read a detailed history here). Knapsack ciphers have essentially disappeared from the cryptographic landscape, as their reputation has been damaged beyond repair. Another example of an absolute break is Shor's algorithm, which, assuming a large scale quantum computer, can factor an N-bit RSA modulus in time proportional to N^3 operations. Since doing an RSA decryption today requires N^3 bit operations we can say that Shor's algorithm breaks RSA in time proportional to what it costs to set up an SSL session today. Now we may be very far away from having quantum computers, but even the remote threat of such a device has caused enduring reputational damage for popular public key ciphers in use today.

Absolute Breaks for Symmetric Ciphers

The other way to demonstrate an absolute break of a cipher is to show that a fixed key of a given length can be recovered in a short amount of elapsed time. So the 56-bit key length of DES was conclusively shown to be too short in 1998 by the EFF DES Cracker device which demonstrated key recovery in 56 hours (a bit an hour!). When we have a symmetric key cipher with a fixed key length, then this is what an absolute attack usually means – key recovery in a few weeks or months (or even days) when the expected time was thousands of years. When such an attack is successful the cipher is deemed broken or we must move to a variant, or an entirely new cipher with a longer key length.

Relative Breaks

Well-designed ciphers will typically not succumb to absolute breaks (barring a breakthrough attack), but even the best ciphers have some blemishes which reduce the effort of brute forcing key recovery. By a relative break we mean an attack which is faster than exhaustive search of the key space (the set of all possible keys), but does not break the cipher in any absolute sense. Relative breaks show that the implied margin of security for the key length is not as large as we thought. But what is the implied margin of security?

When a symmetric key cipher has a key length of n bits this is interpreted to mean that, in the absence of any weaknesses, the security of the cipher is proportional to the cost of a brute force attack on a key space of size 2^{n}. That is, a needle-in-a-haystack search problem where there are 2^{n} pieces of hay. In the worst case an attacker will have to try all possible keys to find the right one (he guesses the right key last) but on average he will need only examine half the possible keys since

$$\frac{1}{2^{n}} \sum_{i=1}^{2^{n}} i = \frac{2^{n} + 1}{2} \approx 2^{n-1}.$$

So we lose one bit of security from the key length in the average case of exhaustive search. Many attacks on symmetric ciphers are of the relative type, identifying a weakness which can be translated into some computational saving over exhaustive search. Let's translate the saving into R bits over the average case of exhaustive search, so that the attack requires about

$$2^{n-1-R}$$

operations.

The question here is how large should R be before we think a relative attack is worth worrying about?

In the case of DES the complementation property gave us R = 1 for free. In the 70's, when DES was essentially the only commercial cipher in town, shaving off just a few bits was significant. This was true because many people thought that a 56-bit key was already teetering on the brink of feasible recovery, and saving a few more bits would definitely tip the scale towards an absolute break. But while saving say 5 bits for DES was significant, the same 5 bits of cryptanalytic advantage means less for a 128-bit cipher and practically nothing for AES-256. Nothing more than a mosquito hitting a bullet train.
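The effect of the same saving on different key lengths can be sketched with a few lines of Python. The attacker budget of 2^{85} operations per year used below is only a hypothetical figure chosen for illustration, and the helper names are not from any library.

```python
def remaining_work_bits(key_bits, saved_bits):
    """log2 of the average-case work left after a relative break saving `saved_bits`."""
    return key_bits - 1 - saved_bits


def years_needed(work_bits, budget_bits_per_year):
    """Years needed for 2**work_bits operations at 2**budget_bits_per_year operations per year."""
    return 2.0 ** (work_bits - budget_bits_per_year)


if __name__ == "__main__":
    BUDGET_BITS = 85  # assumption: attacker performs 2^85 operations per year
    for name, n in [("DES", 56), ("128-bit cipher", 128), ("AES-256", 256)]:
        for r in (0, 5):
            bits = remaining_work_bits(n, r)
            years = years_needed(bits, BUDGET_BITS)
            print(f"{name:15s} R = {r}: 2^{bits} operations, about {years:.3g} years")
```

Saving R bits always divides the work by 2^{R}, but what matters is whether the remaining work comes anywhere near the attacker's budget. For AES-256 it does not, with or without the 5 bits.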

Relative breaks can point to small cracks in the design of a cipher which can potentially be developed into absolute breaks. This is what we are now seeing with SHA-1 where the idea behind an initial saving in the cost of finding collisions of just a few bits has been developed into serious attacks. The relative is on the verge of being converted to the absolute.

This is the period of reputational damage for a cipher, since for a given relative attack we cannot be certain if it will be converted into an absolute break over time. In February last year a new attack on the 64-bit key space of the A5/1 cipher used in GSM devices was announced, with some fanfare, at a Black Hat conference. The attack claimed to provide a relative break by pre-computing a special “rainbow table” of size 2^{58}, but the promised details have yet to materialize.

Relative breaks for AES-256

Let us now turn specifically to AES-256. AES-256 should be secure against an attacker who has materially less than 2^{255} ≈ 10^{76} resources at their disposal. Since 10^{76} is within a few powers of 10 of the estimated number of atoms in the known universe, it is essentially impossible for AES-256 to succumb to pure computational attacks on its key space.

So ruling out absolute breaks (in the absence of discovering a major weakness in AES), this only leaves relative breaks for consideration. Again, how big should the relative computational saving R be before we have a serious or material relative break?

Well let’s be generous and assume that when R = 55 we have a material relative break. This means that the cryptanalyst has saved a computational effort on the order of recovering a DES key. With this saving the cryptanalyst still has 2^{255-55} = 2^{200} resources at their disposal to break an AES-256 key.

You could read the headlines now: “AES-256 Broken - researcher knocks off 17 zeroes from exhaustive search”, where 2^{55} is approximately 10^{17}. But would this result really imply a meaningful threat? All that has been done is to change an absurdly large computational task into a slightly less absurdly large computational task.

We are ceding too much to the cryptanalyst here by giving them a massive 2^{200} sandbox for toying around with how to break AES-256. Every scrap of current computing power in the world running for a year would amount to less than 2^{100} operations, and we are postulating that our cryptanalyst has access to 2^{100} times that power, or roughly 10^{28} centuries of continuous computation at current rates.
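The conversions between powers of two and powers of ten used in this argument are easy to check. The short Python sketch below takes the post's own upper bound of 2^{100} operations per year for the world's computing power as given; the helper name `pow2_as_log10` is illustrative.

```python
import math


def pow2_as_log10(bits):
    """Return x such that 2**bits equals 10**x."""
    return bits * math.log10(2)


if __name__ == "__main__":
    WORLD_BITS_PER_YEAR = 100  # the post's upper bound on yearly world computation
    ATTACK_BITS = 200          # 2^255 average search minus a saving of R = 55 bits

    years_log10 = pow2_as_log10(ATTACK_BITS - WORLD_BITS_PER_YEAR)
    print(f"2^255 is about 10^{pow2_as_log10(255):.1f}")
    print(f"2^55  is about 10^{pow2_as_log10(55):.1f}")
    print(f"time for 2^200 operations: about 10^{years_log10:.1f} years")
    print(f"                           about 10^{years_log10 - 2:.1f} centuries")
```

On these assumptions the 2^{200} attack needs 2^{100} years of the world's entire computing output, which is about 1.3 × 10^{30} years.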

Something is wrong here in the analysis – no one will have 2^{200} resources up their sleeve. The problem with a 256-bit key is that it can shed 55 bits and still lose no real effective security. So the reputational risk is that someone emerges from this sandbox with a 2^{200}-idea that has credence.

Managing the reputation of AES-256

Google Trends is a service which estimates search and news activity for a given keyword. For “AES 256” the graph below was produced

It is evident that there has been a considerable increase in search and news activity since 2007. The letters in the upper graph are links to news stories, which are mainly product announcements for AES-256 support. Vendors are certainly adopting AES-256, and in a previous post I wrote that

… AES-256 is being widely deployed since it conveniently lies at the intersection of good marketing and pragmatic security. In upgrading from AES-128 to AES-256 vendors can legitimately claim that their products use maximum strength cryptography, and key lengths can be doubled (thus squaring the effort for brute force attacks) for a modest 40% performance hit.

However the only possible security threat which would warrant the deployment of 256-bit keys is the existence of large scale quantum computing devices. If this were the case then there is a quantum algorithm that effectively reduces the key space to 128 bits. But if this is the case, then we will be living in a very different world from the one we inhabit now.

Imagine you posed the following question to a group of top physicists. You asked them to present you with ideas for new research projects where they could assume that the budget included all the money that we have, all the money that has ever been, and the total financial assets of the world for the next 10 million years. Would the resulting proposals be credible?

AES-256 puts cryptanalysts on the same research agenda.

Saturday, May 9, 2009

Password Roundup #2

Password issues remain a regular item in security news over the last few weeks. In this roundup I report on the fruits of password harvesting, a new policy from NIST, a kerfuffle between Elcomsoft and PGP, and lastly, how to pass the hash.

Unveiling Salma Hayek

Graham Cluley reported that the public email account of Salma Hayek was hacked, leading to screen shots of her messages being published along with other private details. Allegedly the hack was achieved by resetting her password after “guessing” her date of birth and the name of her most famous film role. Not exactly examples of “high entropy” information.

Fruits of the Password Harvest

Techradar reports on the exposure of The Great Password Scandal. The incident involved a site called My Name is E that lured new users with the promise to integrate multiple social networks if they handed over enough passwords. And people did, spurred by recommendations from Twitter. But these tweets were actually authored by My Name is E using harvested Twitter passwords via the autotweet function acting as a viral marketing vector. The lead developer from My Name is E claims that this was a development feature which was mistakenly left activated in the production version of the site.

A precedent is being established by well-known social networking sites to request people to supply their email username and password so that their contacts may be automatically added as friends. Sites following this approach include Get Satisfaction, LinkedIn, Yelp, Plaxo, Ning, FriendFeed, Orkut, iLike, MySpace and Facebook. Users are being sent the message that it’s ok to hand over these credentials to simplify their social networking experience. Both Twitter and My Name is E know that OAuth is a better solution but they are not quite there yet. Joining up the social fabric of Web 2.0 still trumps security.

Some large scale harvesting was also reported by researchers from the University of California Santa Barbara, who infiltrated a botnet for 10 days earlier this year. The researchers have just published a paper on their findings where they report that they were able to access 70 GB of harvested data which included a massive 56,000 passwords.

NIST Password Policies

NIST has released a new draft document called Guide to Enterprise Password Management, SP 800-118. The 38-page document “provides recommendations for password management, which is the process of defining, implementing, and maintaining password policies throughout an enterprise. Effective password management reduces the risk of compromise of password-based authentication systems”. You can read a brief review of the document here.

Still with NIST, I recently blogged about their approach to making passwords harder to guess by using entropy arguments. At the E-Authentication site you can download a spreadsheet for calculating the entropy of explicit policies (as well as a few other interesting tools and documents).

The Elcomsoft and PGP Kerfuffle

The Register reported an incident at the recent InfoSec 2009 conference where an Elcomsoft poster with the by-line "the only way to break into PGP" was removed as a result of an official complaint lodged by PGP. Unfortunately the PGP and Elcomsoft vendor stands were facing each other in the exhibit hall. "The sign was factually inaccurate and lies about PGP," said Jon Callas, CTO of PGP. "They're not breaking into PGP, they're doing password cracking. There's a difference". But naturally, if a password is protecting your PGP private key, then its protection is no stronger than that password. You can read more about the incident (and see the offending poster) on the Elcomsoft blog.

Pass the Hash

At the RSA 2009 conference the Register asked two security experts to rate the world’s most dangerous exploits. Near the top of the list for Ed Skoudis, a well-known security practitioner and author, was a powerful exploit that has evolved from an old attack known as pass the hash. Attackers exploit an unpatched browser or application vulnerability to capture a Windows password hash and then use it to create a valid login session on another machine with someone else’s password (for Windows only the hash of the password is required, not the password itself). For the attack to be successful the hash must be injected into memory and you can read about the details in this post from Marcus Murray.

Wednesday, May 6, 2009

The Half-life of Vulnerabilities is still 30 Days

Wolfgang Kandek, CTO of Qualys, recently gave an update on the Laws of Vulnerabilities research that Qualys initiated in 2004. Based on scanning 3 million IP addresses, and considering 2 million vulnerabilities, the initial results found that the half-life of unpatched vulnerabilities was 30 days. That is, the observed rate of patching halved the number of open vulnerabilities each month.

Kandek repeated this exercise on a grander scale in 2008, scanning 80 million IP addresses for over 870 million vulnerabilities, including 72 million that were critical. The data confirmed that the vulnerability half-life was 29.5 days, essentially unchanged from the initial finding 4 years before. This was an average taken over 5 industry sectors, where the service sector had the lowest half-life at 21 days and the manufacturing sector had the highest at 51 days. The health sector weighed in at 38 days. Topping the list of the chronically under-patched were MS Office, Windows 2003 SP2, the Sun Java Plugin and Adobe Acrobat.

While the average half-life has remained essentially constant over the last 4 years, Kandek notes that the time from discovery to exploiting a vulnerability is going down. Qualys is aware of 56 zero-day exploits, and the availability of exploits is now measured in single digit days. Even though the half-life measure suggests that a given set of vulnerabilities will rapidly become “extinct”, in practice their threat lives on indefinitely since most vulnerabilities are never fully patched. Further, this patching rate is offset by a 60% replacement rate by new vulnerabilities.
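The half-life figures can be turned into rough patching timelines with a small Python sketch. It assumes a pure exponential decay model, which is an idealisation: as noted above, most vulnerabilities are never fully patched and new ones keep arriving. The function names are illustrative.

```python
import math

# Half-lives in days as reported in the 2008 Qualys data.
HALF_LIVES = {"service": 21, "overall": 29.5, "health": 38, "manufacturing": 51}


def fraction_open(days, half_life_days):
    """Fraction of vulnerabilities still unpatched after `days`, under exponential decay."""
    return 0.5 ** (days / half_life_days)


def days_until(fraction, half_life_days):
    """Days until only `fraction` of the vulnerabilities remain open."""
    return half_life_days * math.log2(1.0 / fraction)


if __name__ == "__main__":
    for sector, half_life in HALF_LIVES.items():
        still_open = fraction_open(90, half_life)
        to_ten_percent = days_until(0.10, half_life)
        print(f"{sector:14s} open after 90 days: {still_open:6.1%}, "
              f"days to reach 10%: {to_ten_percent:5.0f}")
```

Under this model a sector with a 21-day half-life is down to a quarter of its open vulnerabilities after 42 days, while a sector with a 51-day half-life needs 102 days to get there.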

Kandek concludes that

“Security is getting more difficult with attackers becoming extremely sophisticated and the window of exploitation shrinking to days for most critical vulnerabilities … Our goal with this research is to help organizations across different industries understand the broader trends, the potential for damage and the priority of vulnerabilities, so they can make more effective and more immediate decisions to protect their networks. With research like that outlined in the Laws of Vulnerabilities 2.0, we can provide the industry with a statistical look at threat trends in real-time.”

Also, take a look at some recent advice from Tenable Security on how to read vulnerability reports, which will help you interpret Kandek's charts.

Sunday, May 3, 2009

Total Internet computational power = 2^{85} operations per year?

I am currently reading the ECRYPT Yearly Report on Algorithms and Keysizes for 2007-08, the last report in this European project started in 2004. The list of authors includes many well-known and respected cryptographers.

A remark on page 14 caught my eye:

In [55] it is estimated that the total computational power of the Internet is about 2^{85} operations per year.

Reference [55] is to another ECRYPT document that mentions the 2^{85} figure without justification or further reference.

Does anyone have any evidence that this 2^{85} figure is true or even approximately correct?
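One way to get a feel for the figure is to convert it into operations per second and then into a number of machines. The Python sketch below does this; the per-machine rate of 10^{9} operations per second is purely an assumption for illustration, and what counts as an "operation" is left open, as it is in the report.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def ops_per_second(bits_per_year):
    """Sustained rate implied by 2**bits_per_year operations per year."""
    return 2 ** bits_per_year / SECONDS_PER_YEAR


def machines_needed(bits_per_year, ops_per_machine_per_second):
    """Number of machines, each at the given rate, needed to reach the yearly total."""
    return ops_per_second(bits_per_year) / ops_per_machine_per_second


if __name__ == "__main__":
    rate = ops_per_second(85)
    print(f"2^85 operations per year is about {rate:.3g} operations per second")
    # Assumption: each machine sustains 1e9 operations per second all year.
    print(f"machines needed at 1e9 ops/s each: about {machines_needed(85, 1e9):.3g}")
```

On that assumption, 2^{85} operations per year corresponds to a little over a billion machines running flat out all year.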

The $28,000 Question: Project vs. Production Risk

The average cost of an American wedding in 2007 was $28,000. Jeremiah Grossman recently posted that for the same money you could fix the critical vulnerabilities lurking at your website.

In his experience the average number of serious flaws per website is 7, each of which will take an average of 40 hours to fix - confirmed by a 1,000-strong Twitter poll. Then assuming a programming cost of $100/hour you arrive at the figure of

$28,000 = 7 x 40 x $100

in “outstanding insecure software debt” per website. Of course there will be sites that are in much worse shape. As Grossman observes, this figure is not very high, and he asks whether this estimate really supports the implementation of a costly secure software development life cycle?

I think that the key point here is to distinguish between project risks and production risks. A project manager (PM) is concerned naturally with project risks, whose impact can be broadly classified as increased costs, delivery delays and reduced functionality. If we express a risk as a threat, vulnerability and an impact, then for the PM impacts reduce to cost overruns, time overruns and functionality “underruns” (plus combinations thereof). In general, expending time and resources to identify and fix potential security vulnerabilities is not effective in the PM’s risk model, since the vulnerabilities are unlikely to impact required functionality. Software with significant security vulnerabilities may function perfectly well, right up to, and including, the point of exploitation. As such, security vulnerabilities are not high on the risk radar of the PM.

When we move to the production risk model then potential impacts change dramatically, which for web applications, Grossman lists as

… down time, financial fraud, loss of visitor traffic and sales when search engines blacklist the site, recovery efforts, increased support call volume, FTC and payment card industry fines, headlines tarnishing trust in the brand, and so on are typical. Of course this assumes the organization survives at all, which has not always been the case.

The “meaningful” impact costs are therefore situated in the production risk model rather than the project risk model. A source of misunderstanding (and possibly friction) between security and project people is the difference in risk models or outlooks, since most security people assume the view of production risks – it is their role in fact. When Marcus Ranum recently remarked

I don’t know a single senior security practitioner who has not, at some point or other, had to defend an estimated likelihood of a bad thing happening against an estimated business benefit.

I believe that he was talking about the dichotomy between project and production risk. So returning to Grossman’s original issue, the $28,000 to fix web vulnerabilities does not support the deployment of a secure SDL in the project risk model, but it makes much better sense in the production risk model.

Saturday, May 2, 2009

The cost of SHA-1 collisions reduced to 2^{52}

Australian researchers Cameron McDonald, Philip Hawkes and Josef Pieprzyk have announced a new attack to find collisions in SHA-1 requiring only 2^{52} operations. This new result decreases the cost of a collision attack by a factor of over 2000 as compared to previous methods. The researchers note that “practical collisions are within resources of a well funded organisation”.

SHA-1 produces a 160-bit output, which according to the birthday paradox, implies that a collision attack should require approximately 2^{80} operations to succeed. However in early 2005, three Chinese researchers announced a collision attack on SHA-1 that required only 2^{69} operations. Since then a series of cryptanalytic results has weakened confidence in the strength of SHA-1 and other hash functions in the SHA family. The new attack builds on these previous results.
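The numbers in this post can be related with a short Python sketch (helper names are illustrative). Note that a factor of "over 2000" is 2^{11} = 2048, which corresponds to a previous best attack of about 2^{63} operations rather than the 2^{69} figure; that baseline is an inference here and is not stated in the announcement quoted above.

```python
def birthday_bound_bits(output_bits):
    """log2 of the generic collision cost for a hash with `output_bits` of output."""
    return output_bits / 2


def speedup_factor(old_bits, new_bits):
    """Factor by which an attack costing 2**new_bits improves on one costing 2**old_bits."""
    return 2 ** (old_bits - new_bits)


if __name__ == "__main__":
    print("generic bound for SHA-1: 2^%d" % birthday_bound_bits(160))
    for label, old in [("generic bound", 80), ("2005 attack", 69), ("assumed 2^63 baseline", 63)]:
        print(f"2^52 versus {label} (2^{old}): factor {speedup_factor(old, 52):,}")
```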

The 2^{52} announcement came at the informal session of the Eurocrypt 2009 conference, where works-in-progress and results completed too late for submission are discussed. The full details of the attack will be published in due course on the eprint service of the IACR.

On a personal note, Phil Hawkes was my first (and perhaps only) PhD student. He is a gifted mathematician and I am very glad to see him producing world class research results. My thanks to Eric Rescorla for posting this result on his blog.
