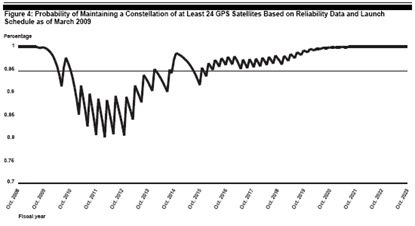


Wednesday, June 24, 2009

The Risk of Degradation to GPS

Needless to say, the GAO findings have been widely discussed, and they were further publicised in a recent televised congressional hearing. The US Air Force, which runs the GPS program for the DoD, has had to reassure its military peers, various congressmen and an anxious public that the GPS service is not in fact on the brink of failure, a scenario the GAO report did not even consider. Articles in the popular press, such as “Worldwide GPS may die in 2010, say US gov” from the Register, are not helping matters. So how did the GPS service end up in this predicament? According to the GAO, the culprit is poor risk management in the execution of the GPS modernisation program.

Tuesday, June 23, 2009

Spike in Tor Clients from Iran

As a by-product of the current turmoil in Iran and the censorship of Internet connections there, there has been a dramatic increase in the number of Tor clients (connection points into the Tor network) created from within Iran. Tim O'Brien at O'Reilly Radar spoke to Andrew Lewman, the Executive Director of the Tor Project, and Lewman stated:

New client connections from within Iran have increased nearly 10x over the past 5 days. Overall, Tor client usage seems to have increased 3x over the past 5 days. There are a lot of rough numbers in these statements, and they are very conservative.

You can find some additional technical details in Lewman's own post on the topic, which includes a graphic of the increase.

Lastly, I recently recommended the Compass site as a good collection of technical documents on security, and you can find their description of an attack on Tor here.

Sunday, June 21, 2009

My ENISA Awareness presentation

Last Friday I gave a presentation at an ENISA conference on raising IT Security Awareness. I have just one idea per slide and next to no text beyond the title. You can find the slides below on Scribd.

IT Security Awareness Tips

Sunday, June 14, 2009

Enterprise Password Management Guidelines from NIST

Those industrious people over at NIST have produced another draft publication in the SP-800 series, Guidelines for Enterprise Password Management. At 38 pages it will be a slim addition to your already bulging shelf of NIST reports. The objective of the report is to provide recommendations for password management, including “the process of defining, implementing, and maintaining password policies throughout an enterprise”. The report is consistently sensible and, in places, quite sage. Overall the message is that passwords are complex to manage effectively, and you will need to spend considerable time and effort covering all the bases. My short conclusion is that a CPO, a Chief Password Officer, is required.

Let’s begin with a definition. A password is “a secret (typically a character string) that a claimant uses to authenticate its identity”. The definition includes the shorter PIN variants, and the longer passphrase variants of passwords. Passwords are a factor of authentication, and as is well-known, not a very strong factor when used in isolation. Better management of the full password lifecycle can reduce the risks of security exposures from password failures. This NIST document will help your enterprise get there.

Storage and Transmission

NIST begins by discussing password storage and transmission, since enforcing more stringent password policies on users is counterproductive if those passwords are not adequately protected in flight and at rest. Web browsers, email clients, and other applications may store user passwords for convenience, but not necessarily in a secure manner. There is an excellent 2006 article on Security Focus by Mikhael Felker on password storage risks in IE and Firefox. In general, applications that store passwords and automatically enter them on behalf of a user make unattended desktops more attractive to opportunistic data thieves, in the workplace for example. Further, as noted in the recent Data Breach Investigations Report (DBIR) from Verizon, targeted malware is not just extracting passwords from disk but directly from RAM and other temporary storage locations. From page 22 of the DBIR, “the transient storage of information within a system’s RAM is not typically discussed. Most application vendors do not encrypt data in memory and for years have considered RAM to be safe. With the advent of malware capable of parsing a system’s RAM for sensitive information in real-time, however, this has become a soft-spot in the data security armour”.

As NIST observes, many passwords and password hashes are transmitted over internal and external networks to provide authentication between hosts, and the main threat to such transmissions is sniffing. Sniffers today are quite sophisticated, capable of extracting unencrypted usernames and passwords sent by common protocols such as Telnet, FTP, POP and HTTP. NIST states that mitigating sniffing is relatively easy, beginning with encrypting traffic at the network layer (VPN) or at the transport layer (SSL/TLS). A more advanced mitigation is to use network segregation and fully switched networks to protect passwords transmitted on internal networks. But let’s not forget that passwords can also be captured at source by key loggers and other forms of malware, as noted by both NIST and the DBIR.
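To make the transport-layer mitigation concrete, here is a minimal sketch using Python's standard `ssl` module. The helper names `make_client_context` and `open_tls_connection` are hypothetical, and the TLS 1.2 floor is an assumption of this sketch rather than anything NIST specifies in the draft.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    # create_default_context() enables certificate verification and hostname checking
    context = ssl.create_default_context()
    # Assumption: refuse protocol versions older than TLS 1.2
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def open_tls_connection(host: str, port: int = 443) -> ssl.SSLSocket:
    # Wrap a plain TCP socket so anything sent over it, passwords included,
    # is encrypted in flight and unreadable to a passive sniffer
    raw = socket.create_connection((host, port), timeout=10)
    return make_client_context().wrap_socket(raw, server_hostname=host)
```

A call such as `open_tls_connection("example.com")` returns a socket whose `sendall` output crosses the network encrypted; the certificate check matters as much as the encryption, since it stops an attacker from simply posing as the server.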

Guessing and Cracking

NIST then moves on to a discussion of password guessing and cracking. By password guessing NIST means online attacks on a given account, while password cracking is defined as attempting to invert an intercepted password hash offline. Password guessing is further subdivided into brute force attacks and improved dictionary attacks. The main mitigations against guessing attacks are mandating appropriate password length and complexity rules, and reducing the number of possible online guessing attempts. Restricting the number of guesses to a small number like 5 or so is not a winning strategy.
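Why limiting online attempts works can be shown with a little arithmetic. The sketch below assumes the password is drawn uniformly from its space and that each guess tests a new candidate; the function name and the throttling figures are hypothetical. Real users choose far from uniformly, so treat the result as a lower bound on the attacker's odds.

```python
def online_guess_success(space_size: int, guesses_per_day: int, days: int) -> float:
    """Chance that an online attacker finds a uniformly chosen password.

    Each guess is assumed to test one new candidate, so after `attempts`
    guesses the attacker has covered attempts / space_size of the space.
    """
    attempts = guesses_per_day * days
    return min(1.0, attempts / space_size)


# A 6-digit PIN with guesses throttled to 100 per day for a year
pin_risk = online_guess_success(10**6, guesses_per_day=100, days=365)
# The same PIN when the attacker can make 10,000 guesses per day
unthrottled = online_guess_success(10**6, guesses_per_day=10_000, days=365)
```

Throttling brings the yearly risk for the PIN down to under 4%, while without it the whole space is covered well within the year.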

The main mitigation against password cracking is to increase the attacker's effort by salting and stretching. Salting increases the storage needed to invert a password hash by pre-computation (for example, using rainbow tables), while stretching increases the time needed to compute each password guess. Stretching is not a standard term as far as I know; it is more commonly referred to as the iteration count, or more simply, as password spin.
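A minimal sketch of salting plus stretching, using PBKDF2-HMAC-SHA256 from Python's `hashlib` as one standard construction (NIST's draft is not assumed to prescribe this particular function). The iteration count and the helper names are illustrative assumptions.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 200_000  # assumption: the "stretching" work factor; tune to your hardware


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, digest) using salted, iterated PBKDF2-HMAC-SHA256."""
    if salt is None:
        # A fresh random salt per password makes one precomputed table useless
        salt = os.urandom(16)
    # Each guess now costs ITERATIONS hash computations instead of one
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Recompute the digest with the stored salt and compare in constant time."""
    _, digest = hash_password(password, salt)
    return hmac.compare_digest(digest, expected)
```

Store the salt alongside the digest. The same password hashed twice yields different digests, so an attacker must attack each account separately and pay the full iteration cost for every guess.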

Complexity

Next is a discussion of password complexity, and of the size of the password spaces produced by various combinations of length and composition rules, as shown in the table below. Such computations are common, but security people normally take some pleasure in seeing them recomputed. NIST observes that, in general, the number of possible passwords increases more rapidly with greater length than with permitting additional character sets.
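The NIST observation about length versus character sets can be checked directly. This sketch compares adding one character to an 8-character lowercase password with widening the alphabet to include digits; the specific alphabet sizes are illustrative choices, not figures taken from the NIST table.

```python
import math


def space_size(alphabet: int, length: int) -> int:
    """Number of possible passwords of exactly `length` characters."""
    return alphabet ** length


def bits(alphabet: int, length: int) -> float:
    """The same space expressed in bits."""
    return length * math.log2(alphabet)


base = space_size(26, 8)    # 8 lowercase letters
longer = space_size(26, 9)  # one more character, same alphabet
wider = space_size(36, 8)   # same length, digits added

print(longer / base)  # 26.0
print(wider / base)   # about 13.5
```

One extra character multiplies the space by the full alphabet size (26 here), while adding ten digits multiplies it by only (36/26)^8, roughly 13.5.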

Of course, the table above does not take into account user bias in password selection. Large parts of a password space may effectively contain no passwords that a user would ever select, and password cracking tools, like the recently upgraded LC6, made their name on that distinction. NIST briefly mentions password entropy, but not in any systematic manner. There is a longer discussion of password entropy in another NIST publication. NIST does suggest several heuristics for strong password selection, and more stringent criteria for passwords chosen by administrators.
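To see how far user bias pulls real passwords below the raw space size, here is a sketch of the length-based entropy heuristic in NIST SP 800-63 Appendix A: 4 bits for the first character, 2 bits each for characters 2 to 8, 1.5 bits each for characters 9 to 20, 1 bit beyond that, and a 6-bit bonus for a composition rule. Treating this as the discussion the post refers to is an assumption, and the optional dictionary-check bonus is omitted here.

```python
import math


def nist_entropy_estimate(length: int, composition_rule: bool = False) -> float:
    """Length-based entropy heuristic for user-chosen passwords (SP 800-63 style)."""
    bits = 0.0
    for position in range(1, length + 1):
        if position == 1:
            bits += 4.0   # first character
        elif position <= 8:
            bits += 2.0   # characters 2 to 8
        elif position <= 20:
            bits += 1.5   # characters 9 to 20
        else:
            bits += 1.0   # characters 21 and beyond
    if composition_rule:
        bits += 6.0       # rule requiring upper case and non-alphabetic characters
    return bits


# Full space of 8-character passwords over 94 printable characters
uniform_bits = 8 * math.log2(94)                             # about 52.4 bits
user_bits = nist_entropy_estimate(8, composition_rule=True)  # 24 bits
```

The gap between roughly 52 bits for a uniformly random 8-character password and 24 bits for a user-chosen one is exactly the margin that dictionary-driven cracking tools exploit.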

Get a CPO

The NIST guidelines go on to discuss some strategies for password reset, and also provide an overview of existing enterprise password management solutions. A useful glossary is presented as well. Overall, the issues surrounding password management are complex and involved, and NIST gives good guidance on the main ones. It would appear that large companies running big heterogeneous IT environments will require the services of a CPO (Chief Password Officer) to keep their password management under control and within tolerable risk limits.

Saturday, June 13, 2009

How to Choose a Good Chart

Choosing a Good Chart

Wednesday, June 10, 2009

Paper Now Available - The Cost of SHA-1 Collisions reduced to 2^52

Tuesday, June 9, 2009

The Long Tail of Life

In performing risk assessments we are often asked (or required) to make estimations of values. Typically once a risk is identified it needs to be rated for likelihood (how often) and severity (how bad). The ratings may be difficult to make in the absence of data or first-hand experience with the risk. We often therefore rely on “guesstimates”, calibrated against similar estimates by other colleagues or peers.

Here is a question to exercise your powers of estimation.

I recently came across an article by Carl Haub of the US Population Reference Bureau which seeks to answer the following question - How many people have ever lived? Put another way, over all time, how many people have been born? Haub says that he is asked this question frequently, and apparently there is something of an urban legend in population circles which maintains that 75% of all people who had lived were living in the 1970s. This figure sounds plausible to the lay person since we believe most aspects of the 20th century are characterised by exponential growth.

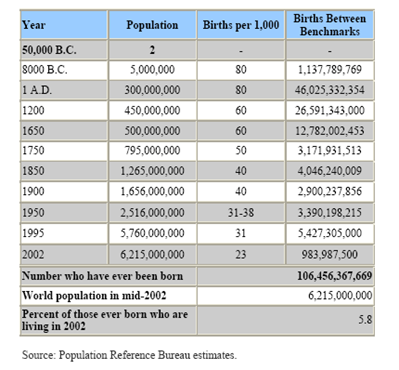

Haub sought to debunk this claim with an informed estimate. He observes that any estimate of the total number of people who have ever been born will depend on two factors: (1) the length of time humans are thought to have been on Earth and (2) the average size of the human population at different periods. Haub assumes that people appeared around 50,000 B.C., and from then until now he defines ten epochs (benchmarks) characterised by different birth rates.

The period from 50,000 B.C. to 8,000 B.C., the dawn of agriculture, was a long struggle. Life expectancy at birth probably averaged only about 10 years for this period, and therefore for most of human history. Infant mortality is thought to have been very high, perhaps 500 infant deaths per 1,000 births or even higher. By 1 A.D. the population had risen to 300 million, which represents a meagre growth rate of only 0.0512 percent per year.
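The text does not state the population at 8,000 B.C., but the quoted rate implies it. Assuming continuous (exponential) growth over the 8,000 years to 1 A.D., this sketch solves backwards for the starting population and then recovers the 0.0512 percent figure. The function names are illustrative.

```python
import math


def annual_growth_rate(p_start: float, p_end: float, years: float) -> float:
    """Continuous growth rate r such that p_end = p_start * exp(r * years)."""
    return math.log(p_end / p_start) / years


def implied_start(p_end: float, rate: float, years: float) -> float:
    """Starting population implied by an end population and a growth rate."""
    return p_end / math.exp(rate * years)


# 8,000 B.C. to 1 A.D. is taken as 8,000 years
start = implied_start(300e6, 0.000512, 8000)               # about 5 million
rate_pct = annual_growth_rate(start, 300e6, 8000) * 100   # 0.0512
```

A sixtyfold increase over 8,000 years sounds large, yet it works out to about half of one hundredth of a percent per year.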

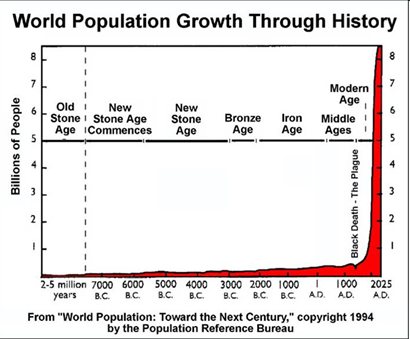

By 1650, world population had risen to about 500 million, not a significant increase over the 1 A.D. estimate. Numbers were kept in check by the Black Plague, which may have killed 100 million people. By 1800, however, the world population had passed the 1 billion mark, and it has since increased rapidly to the current 6 billion or so.

The graph below shows another analysis which corroborates Haub's estimates. The curve has a long, nearly flat tail followed by a steep increase in population from about the 18th century.

Looking at the curve above, you may be tempted to believe the urban legend that 75% of all people ever born were alive in the 70s. Haub in fact reports that in 2002 just under 6% of all people ever born were living. Put another way, approximately 106 billion people had been born over all time, of whom about 6 billion were then living.

The key to this conclusion is the extremely high birth rate (80 per thousand) required to keep humans from becoming extinct between 50,000 B.C. and 1 A.D. According to Haub there had been about 46 billion births by 1 A.D., yet the population at that time was only around 300 million. That is, the vast majority of people who have been born have also died.
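A rough reconstruction shows how a birth rate of 80 per thousand produces so many births. The sketch assumes births in a period equal birth rate times average population times length of the period, and that population grew exponentially from 8,000 B.C. to 1 A.D. at the 0.0512 percent rate quoted earlier (which implies about 5 million people at the start). These modelling choices are assumptions, not Haub's published method.

```python
import math

BIRTH_RATE = 80 / 1000  # births per person per year (from the text)
YEARS = 8000            # 8,000 B.C. to 1 A.D.
P_END = 300e6           # population at 1 A.D. (from the text)
GROWTH = 0.000512       # 0.0512 percent per year (from the text)
# Assumption: continuous growth, so the starting population follows from the rate
P_START = P_END / math.exp(GROWTH * YEARS)


def mean_population(p0: float, p1: float) -> float:
    """Time-average of a population growing exponentially from p0 to p1."""
    return (p1 - p0) / math.log(p1 / p0)


births = BIRTH_RATE * mean_population(P_START, P_END) * YEARS  # roughly 46 billion
share_alive = P_END / births                                    # well under 1%
```

The result lands close to the 46 billion figure, and it makes the point plain: fewer than one in a hundred of those born by 1 A.D. were alive at that date.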

How good was your estimate to the original question?

Interestingly, WolframAlpha returns the correct answer to the question. My own guess was closer to 30–40% of all people being alive today, certainly nowhere near 6%.

Sunday, June 7, 2009

Technical overviews at Compass Security

Compass Security is a Swiss security firm specialising in ethical hacking and penetration testing. Amongst other offerings, they have a long list of freely available quality publications that present technical overviews of security attacks and tools, as well as many other technologies from a security point of view. There are probably 100 or so articles, published between 2004 and last year. I came across the site last year when I was researching USB security and found the Compass paper on U3 Insecurity.

Please note that some of the publications are in German only.

Saturday, June 6, 2009

Great Security Mind Maps at MindCert

I often use mind maps to organise my thoughts for longer posts. FreeMind is my tool of choice, and I have published some of my maps in the FreeMind gallery using its Flash plug-in.

I found some great security mind maps at MindCert, a company committed to “bring[ing] you the best in Mind Mapping with a view to gaining knowledge in the IT Industry and passing IT Certifications from Cisco, CISSP, CEH and Others”. The MindCert homepage currently has mind maps for Certified Ethical Hacking, Cracking WEP with Backtrack 3 and Cryptography for CISSP (plus others).

Googling “security mind maps” brings up a wealth of links. So it seems many people are trying to understand security in terms of mind maps.

Thursday, June 4, 2009

A Risk Analysis of Risk Analysis

The title of this post is taken from a paper, both sobering and sensible, published last year by Jay Lund, a distinguished professor of civil engineering at the University of California (Davis) who specialises in water management. The paper discusses the merits of Probabilistic Risk Assessment (PRA), which is a “systematic and comprehensive methodology to evaluate risks associated with a complex engineered technological entity”. PRA is notably used by NASA (see their 320 page guide), and it was essentially mandated for assessing the operational risks of nuclear power plants during the 1980s and 1990s.

Professor Lund’s views are derived from his experience in applying PRA to decision-making and policy-setting for effective water management, as well as from teaching PRA methods. His paper starts with two propositions: (1) PRA is a venerated collection of mathematically rigorous methods for performing engineering risk assessments, and (2) PRA is rarely used in practice. Given the first proposition, he seeks to provide some insight into the “irrational behaviour” that has led to the second. Why don’t risk assessors use the best tools available?

Discussions on the merits of using modeling and quantitative risk analysis in IT Security flare up quite regularly in the blogosphere. Most of the time the discussions are just storms in HTML teacups – the participants usually make some good points but the thread rapidly peters out since both the detractors and defenders typically have no real experience or evidence to offer either way. So you either believe quant methods would be a good idea to use or you don’t. With Lund we have a more informed subject who understands the benefits and limits of a sophisticated risk methodology, and has experience with its use in practice for both projects and policy-setting.

Know Your Decision-Makers

After a brief introduction to PRA, Lund begins by providing some anecdotal quotes and reasoning for PRA being passed over in practice.

People would rather live with a problem that they cannot solve than accept a solution that they cannot understand.

Decision-makers are more comfortable with what they are already using. As I was once told by a Corps manager, “I don’t trust anything that comes from a computer or from a Ph.D.”

“Dream on! Hardly anyone in decision-making authority will ever be able to understand this stuff.”

PRA is too hard to understand. While in theory PRA is transparent, in practical terms, PRA is not transparent at all to most people, especially lay decision makers, without considerable investments of time and effort.

So the first barrier is the lack of transparency in PRA to the untrained, who will often be the decision-makers. There is an assumption here that risk support for decisions under uncertainty can be provided in the form of concise, transparent and correct recommendations – and PRA is not giving decision makers that type of output. But I think in at least some cases this expectation is unreasonable. For some decisions there will be a certain amount of inherent complexity and uncertainty which cannot be winnowed away for the convenience of presentation. I am not sure, for example, to what extent the risks associated with a major IT infrastructure outsourcing can be made transparent to non-specialists.

The next few comments from Lund are quite telling.

People who achieve decision-making positions typically do so based on intuitive and social skills and not detailed PRA analysis skills.

Most decisions are not driven by objectives included in PRA. Decision-makers are elected or appointed. Being re-elected can be more important than being technically correct on a particular issue. Empirical demonstration of good decisions from PRA is often unavailable during a person’s career.

So decision-makers are usually not chosen for their analytical skills, and what motivates such people may well lie outside the scope of what PRA considers “useful” decision criteria. Developing a methodology tailored to solving risk problems in isolation from the intended decision-making audience is therefore counter-productive.

And here is the paradox as I see it:

A poorly-presented or poorly-understood PRA can raise public controversy and reduce the transparency and credibility of public decisions. These difficulties are more likely for novel and controversial decisions (the same sorts of problems where PRA should be at its most rigorous).

So for complex decisions that potentially have the greatest impact in terms of costs and/or reputation, in exactly the circumstances where a thorough risk assessment is required, transparency rather than rigour is the order of the day.

Process Reliability

Lund notes that PRA involves a sequence of steps that must each succeed to produce a reliable result. Those steps are problem formulation, accurate solution of the problem, correct interpretation of the results, and then proper communication of the results to stakeholders or decision-makers. In summary, then, we have four steps: formulation, solution, interpretation and communication. He asks:

What is the probability that a typical consultant, agency engineer, lay decision-maker, or even a water resources engineering professor will accurately formulate, calculate, interpret, or understand a PRA problem?

He makes the simple assumption that the success of each step is independent of the others, which he justifies by saying that the steps are often segregated in large organizations. In any case, he presents the following graph, which plots step (component) success against overall success.

Lund describes this as a sobering plot, since it shows that even with a 93% success rate at each step, the final PRA is successful only 75% of the time. When the step success rate is only 80%, the PRA succeeds just 41% of the time (not worth doing). We should not take the graph as an accurate plot, but rather as an illustration of the perhaps non-intuitive relation between step (component) success and overall success.
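Under Lund's independence assumption, the overall success rate is simply the per-step rate raised to the number of steps (four here). This sketch reproduces the two figures quoted above and inverts the relation to ask what per-step reliability a target overall rate would demand. The function names are illustrative.

```python
def overall_success(step_success: float, steps: int = 4) -> float:
    """Probability that every one of `steps` independent steps succeeds."""
    return step_success ** steps


def required_step_success(target: float, steps: int = 4) -> float:
    """Per-step success rate needed to reach a target overall success rate."""
    return target ** (1 / steps)


for p in (0.93, 0.80):
    print(f"step {p:.0%} -> overall {overall_success(p):.0%}")
# step 93% -> overall 75%
# step 80% -> overall 41%
```

Reaching 90% overall would require each of the four steps to succeed about 97.4% of the time, which shows why a reliable PRA is expensive.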

A Partial PRA Example

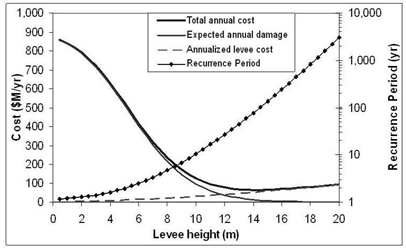

Lund also describes an interesting example of a partial PRA, where deriving a range of solutions likely to contain the optimal solution to support decision-making is just as helpful as finding the exact optimal solution. The problem he considers is straightforward: given an area of land that has a fixed damage potential D, what is the risk-based optimal height of a levee (barrier or dyke) to protect the land which minimizes expected annual costs? The graph below plots the annual cost outcomes across a wide range of options.

There are three axes to consider: one horizontal (the levee height), a left vertical (annual cost) and a right vertical (recurrence period). Reading the left vertical at a zero-height levee (that is, no levee), total annual costs are about $850 million, the best part of a billion dollars of damage if left unaddressed. Considering the right vertical, for a 20m levee, costs are dominated by maintaining the levee, and water levels exceeding the levee height (called an overtopping event) are expected less than once per thousand years.

The recurrence period states that the water levels reaching a given height H will be a 1-in-T year event, which can also be interpreted as the probability of the water level reaching H in one year is 1/T. For a levee of less than 6m in height there is no material difference between the total cost and the cost of damage, which we can interpret as small levees being cheap and an overtopping event likely.
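The 1/T reading extends naturally to longer horizons. Assuming each year is independent with exceedance probability 1/T (an assumption of this sketch, not a claim from the paper), the chance of at least one overtopping in n years is 1 - (1 - 1/T)^n. The function name is illustrative.

```python
def prob_at_least_one(recurrence_years: float, horizon_years: int) -> float:
    """Chance of one or more exceedances of a 1-in-T-year level within the horizon.

    Each year is treated as independent with exceedance probability 1/T.
    """
    annual = 1.0 / recurrence_years
    return 1.0 - (1.0 - annual) ** horizon_years


# A "1-in-100 year" level over a 50-year horizon
risk_50y = prob_at_least_one(100, 50)      # roughly 0.39
# A "1-in-1000 year" level over the same horizon
risk_rare = prob_at_least_one(1000, 50)    # roughly 0.05
```

A "1-in-100 year" event therefore has close to a 40% chance of occurring at least once over a 50-year asset life, which is easy to underestimate from the label alone.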

At 8m - 10m we start to see a separation between the total and damage cost curves, as the likelihood of an overtopping event decreases and the levee cost increases. At 14m, levee costs are dominant and the expected annual damage from overtopping seems marginal. In fact, the optimal solution is a levee of height 14.5m, yielding a recurrence period for overtopping of 102 years. Varying the levee height by 1m around the optimal value (either up or down) gives a range of $65.6 - $66.8 million for total annual costs. Lund draws some excellent conclusions from this example, noting that such an analysis is useful for:

a) Identifying the range of promising solutions which are probably robust to most estimation errors,

b) Indicating that within this range a variety of additional non-economic objectives might be economically accommodated, and

c) Providing a basis for policy-making which avoids under-protecting or over-protecting an area, but which can be somewhat flexible.

I think that this is exactly the type of risk support for decision-making that we should be aiming for in IT Risk management.
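The structure of Lund's levee problem can be sketched in a few lines: total annual cost is the levee cost plus the damage potential times the annual overtopping probability, and the useful output is a band of near-optimal heights rather than a single point. Only the $850 million no-levee figure comes from the text; the exponential exceedance curve, its scale, and the per-metre levee cost are hypothetical, so the numbers will not match Lund's graph.

```python
import math

# Hypothetical model, NOT Lund's data; only the $850M no-levee figure is from the text
DAMAGE_M = 850.0   # expected annual damage with no levee, $ million
SCALE_M = 3.0      # assumption: overtopping probability falls by a factor e per 3 m
COST_PER_M = 2.5   # assumption: annualised levee cost, $ million per metre of height


def p_overtop(height: float) -> float:
    """Annual probability that water exceeds the levee, i.e. 1/T."""
    return math.exp(-height / SCALE_M)


def total_annual_cost(height: float) -> float:
    """Levee cost plus expected annual damage from overtopping."""
    return COST_PER_M * height + DAMAGE_M * p_overtop(height)


heights = [i / 10 for i in range(0, 201)]  # 0.0 m to 20.0 m in 0.1 m steps
best = min(heights, key=total_annual_cost)
recurrence_years = 1 / p_overtop(best)

# Heights within 2% of the optimal cost: the "range of promising solutions"
band = [h for h in heights if total_annual_cost(h) <= 1.02 * total_annual_cost(best)]
print(f"optimum {best:.1f} m, recurrence about {recurrence_years:.0f} years")
print(f"near-optimal band {band[0]:.1f} m to {band[-1]:.1f} m")
```

Because the cost curve is flat near its minimum, the band spans a few metres, leaving room to accommodate non-economic objectives at little extra cost, which is the point of Lund's conclusions (b) and (c).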

Last Remarks

At only 8 pages, the paper by Professor Lund is required reading. PRA, he surmises, can be sub-optimal when it has high costs, a potentially low probability of success, or inconclusive results. His final recommendation is to reserve its use for situations involving very large expenditures or very large consequences, large enough to justify the kinds of expenses needed for a PRA to be reliable. Note that he does not doubt that PRA can be reliable, but you really have to pay for it. In IT risk management I think we have more to learn from Cape Canaveral and Chernobyl than from Wall Street.

Wednesday, June 3, 2009

Another vote of confidence for Whitelisting

McAfee recently announced its successful acquisition of Solidcore Systems, a provider of dynamic whitelisting technology. McAfee now claims to have “the first end-to-end compliance solution that includes dynamic whitelisting and application trust technology, antivirus, antispyware, host intrusion prevention, policy auditing and firewall technologies”.

I recently posted on the undeniable logic of whitelisting. Malware writers are shifting away from the mass distribution of a small number of threats to the micro distribution of millions of targeted threats. This strategy is eroding the effectiveness of classic malware detection through signature analysis. As Edward Brice of Lumension remarks, the “block and tackle” approach of blacklisting is not sustainable.
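The core logic of whitelisting is default deny: only code whose fingerprint is on an approved list may run, so a never-before-seen targeted threat is blocked without needing a signature. This is a minimal sketch of that idea using SHA-256 digests; the allowlist contents and function name are hypothetical, and it does not describe how Solidcore's dynamic whitelisting actually works (commercial products also handle updates and trusted publishers).

```python
import hashlib

# Hypothetical allowlist: SHA-256 digests of approved executables
APPROVED = {
    hashlib.sha256(b"approved tool v1.0").hexdigest(),
}


def may_execute(binary: bytes) -> bool:
    """Default deny: anything not explicitly approved is blocked."""
    return hashlib.sha256(binary).hexdigest() in APPROVED
```

The contrast with blacklisting is that the list to maintain is the small, known set of good software rather than the ever-growing set of bad software.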

Partially to defuse whitelisting hype, and also to preserve current revenue models, we can expect to see a transition towards hybrid offerings that combine black- and whitelisting, such as the teaming up of Bit9 and Kaspersky. McAfee states that:

As businesses seek to more easily mitigate the risks associated with vulnerable or malicious applications downloaded by employees, they will extend beyond signature-based anti-malware with the addition of dynamic whitelisting and application trust technology.

Whitelisting is poised to become the protagonist in the cast of end-point security technologies.

Tuesday, June 2, 2009

The Boys are Back in Town – the return of L0PHTCRACK

The famous password cracking tool L0PHTCRACK (or LC for short) is back on the market, upgraded to LC6. The tool was acquired by Symantec as part of its 2004 purchase of @Stake, which had developed the tool for audit and consulting engagements.

Symantec ended formal support for LC in 2005. However, a clause in the acquisition contract gave the developers an option to reacquire the tool in such circumstances, and several former members of the hacker high priest group L0pht Heavy Industries have exercised that option. The new site for LC6 is here, where it is marketed as a password audit and recovery tool.

The basic version of LC6 at $295 (called Professional) includes password assessment & recovery, dictionary and brute force support, international character sets, password quality scoring, Windows & Unix support, remote system scans and executive reporting. The Professional version supports up to 500 accounts. Other versions at double or four times the cost support an unlimited number of accounts, installed on multiple machines.

Note that LC6 is subject to United States export controls.

Monday, June 1, 2009

Going, Going … Gone! Auctioning IT Security Bugs

One day in 1947 the Mark II computer at the Harvard Computation Laboratory failed, and the cause was traced back to a bug (specifically a moth) found in one of its electromechanical relays. It was already common for engineers to attribute inexplicable defects to “bugs in the system”, but the Mark II team had now found real evidence of one of these mythical bugs. The bug was taped into the Mark II log book for posterity, and soon all computer defects were referred to as bugs. While the Mark II story has passed into computer folklore, the bugs themselves have not.

Most bugs today occur in software, and part of the reason for this is sheer size. Windows XP has 40 million lines of code, which if printed out on A4 paper would stretch for over 148 kilometres with the pages laid end-to-end. If you wanted to check Windows Vista, you would have a further 37 kilometres of reading, and a further 180 kilometres for the Mac operating system. When software becomes this large and complex, it is not surprising that bugs creep in.
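The 148 kilometre figure can be reproduced with simple arithmetic. The page density is not stated in the text; about 80 lines per A4 page, with pages laid along their 297 mm long edge, is an assumption inferred here because it yields the quoted number.

```python
A4_LONG_EDGE_M = 0.297  # length of the long side of an A4 sheet, in metres
LINES_PER_PAGE = 80     # assumption: not stated in the text


def printed_length_km(lines_of_code: int, lines_per_page: int = LINES_PER_PAGE) -> float:
    """Length of a printout with pages laid end-to-end along their long edge."""
    pages = lines_of_code / lines_per_page
    return pages * A4_LONG_EDGE_M / 1000


xp_km = printed_length_km(40_000_000)  # about 148.5 km
```

Forty million lines at 80 lines per page is half a million sheets of paper.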

Security bugs, also referred to as vulnerabilities, potentially permit an attacker to bypass security mechanisms and gain unauthorized access to system resources. Security bugs normally do not interfere with the operation of software and typically only become an issue when a bug is found and then exploited in an attack. The Zotob worm that infected many high-profile companies in August 2005 exploited a vulnerability that had been undetected since the release of Windows 2000. In May 2008 a 25-year-old bug in the BSD file system was discovered and fixed.

How are vulnerabilities found? Well software vendors uncover many bugs themselves, but a significant number of vulnerabilities are also found by third parties such as security software specialists, researchers, independent individuals and groups, and of course, hackers. Software vendors encourage these bug hunters to report their findings back to them first before going public with the vulnerability, so that an appropriate patch can be prepared to limit any potential negative consequences. In the computer security industry this is called “responsible disclosure”. For bug hunters that abide by this principle, the software vendors are normally willing to give kudos for finding vulnerabilities, and in some cases, also provide a nominal financial reward.


Over time bug hunters have started to become more interested in the financial reward part rather than the kudos. For example, in August last year a lone security researcher was asking €20,000 for a vulnerability he had found in a Nokia platform. Was this a good deal? What monetary value do you place on finding such a vulnerability? Software vendors want to produce patches for vulnerabilities in their products as soon as possible, while hackers want to produce code exploiting a known vulnerability as soon as possible. Considering these alternatives, how much should the bug hunter be paid for his or her services? Are low financial rewards from software companies driving bug hunters into the arms of hackers? Perhaps a market could efficiently create a fair price.

Charlie Miller certainly thinks so. The ex-NSA employee described his experiences selling vulnerabilities in an interesting paper, and you can read a quick interview with him at Security Focus. Miller found it hard to set a fair price and had to rely significantly on his personal contacts to negotiate a sale, contacts that are likely not available to most security researchers.

In 2007 a Swiss company called WSLabi introduced an online marketplace where security bugs could be advertised and auctioned off to the highest bidder. This experiment in security economics appears to have lasted about 18 months, and the WSLabi site is now unavailable. The marketplace started running in July 2007, and in the first two months 150 vulnerabilities were submitted and 160,000 people visited the site. For the sales that were publicly disclosed, the final bids ranged from €100 to €5,100. An example list of advertised vulnerabilities on the marketplace is shown below.

The WSLabi marketplace was controversial, since many security people believe that hackers, or more recently, organized digital crime, should not have early access to vulnerabilities. In 2005 eBay accepted a critical vulnerability in Excel as an item to be auctioned, but management quickly shut down the auction, saying that the sale would violate the site's policy against encouraging illegal activity. "Our intention is that the marketplace facility on WSLabi will enable security researchers to get a fair price for their findings and ensure that they will no longer be forced to give them away for free or sell them to cyber-criminals," said Herman Zampariolo, head of the auction site. In another interview Zampariolo explains that "The number of new vulnerabilities found in developed code could, according to IBM, be as high as 140,000 per year. The marketplace facility on WSLabi will enable security researchers to get a fair price for their findings and ensure that they will no longer be forced to give them away for free or sell them to cyber-criminals".

Unfortunately the marketplace experiment was unexpectedly cut short. In April 2008 the WSLabi CEO Roberto Preatoni blogged about his regrettable arrest and the damage it did to trust in WSLabi as a broker, notwithstanding its being Swiss! Six months later, in a public interview, Preatoni remarked that "It [the marketplace] didn't work very well … it was too far ahead of its time". Apparently security researchers recognised the value of an auction site like WSLabi, but very few buyers proved willing to use it. Buyers seemed to value their privacy more than a fair price. Preatoni is now directing his company’s efforts towards using zero-day threat information to develop unified threat hardware modules, which you can read about in the last WSLabi blog post from October 2008.